Mirror of https://github.com/sun-guannan/CapCutAPI.git, synced 2025-11-25 03:15:00 +08:00

Compare commits · 91 commits

`2eb6c8d761`, `b83a013a42`, `b74247e60c`, `13a57ba0f2`, `ff61c38114`, `10ade1b57a`, `e5e74e275a`, `59c04e8f77`, `2cff5d2e20`, `aaf196f926`,
`cfde6304f9`, `80c03c00ea`, `b97931392c`, `66fb7d066b`, `4377d92548`, `77d2c9e108`, `d904c78187`, `04e01449e3`, `aba58dd845`, `83c33c15c4`,
`f0ca6afe5a`, `76a5bc3e15`, `d68eb4ddb7`, `2e82bb719c`, `cc7cba9af6`, `fa16b9d310`, `0052c44e88`, `f45b2fe314`, `55c28c6b4a`, `cce0500bc4`,
`9bc28ebab6`, `fc53398c27`, `29fc42bfc4`, `341fc022a9`, `195a927f04`, `0a05b6487b`, `c94b11a1f5`, `8134ed9489`, `2dd2ff69bd`, `4a77eea949`,
`fef03abb47`, `93c1c76e5b`, `7d9105e2e0`, `f24d1d2d44`, `af1faf7e77`, `6d6932ab2d`, `74327da3f4`, `78c69abfd3`, `8300dab49b`, `007bc7d83d`,
`fc0c05498e`, `7881ef0e87`, `57a7bdcce2`, `5c2d17fbad`, `8fd71e07e9`, `c814db83db`, `10035b22ac`, `358b896e9d`, `2fd0233682`, `57855f3a92`,
`0c1bc2924a`, `5a9479fdee`, `878b6ef6dc`, `4e1c593922`, `586185551f`, `a79bd85ff4`, `5b21133535`, `93f43e339a`, `f0c5254a04`, `1774884241`,
`8ca273dfb7`, `fec8156784`, `196775faf8`, `ae9e72a429`, `b5ffbff3bc`, `50f7b3fe09`, `9fbe4dc4fe`, `62ff49af74`, `369fa2d45e`, `cd89301572`,
`7b22159ca1`, `fa2cf1ea4d`, `e31a9776c2`, `f8bdab82a8`, `cedc0aa414`, `6e71134748`, `cc596541cd`, `f27ba69d7d`, `3ba187d4d5`, `3f587bc6e7`,
`21580db6f0`

**`MCP_Documentation_English.md`** · 254 · Normal file

`@@ -0,0 +1,254 @@`

# CapCut API MCP Server Documentation

## Overview

The CapCut API MCP Server is a video editing service built on the Model Context Protocol (MCP). It exposes CapCut's video editing functionality as MCP tools, so you can integrate professional-grade video editing into any application that has an MCP client.

## Features

### 🎬 Core Capabilities

- **Draft Management**: Create, save, and manage video projects
- **Multimedia Support**: Process video, audio, images, and text
- **Advanced Effects**: Effects, animations, transitions, and filters
- **Precise Control**: Timeline, keyframes, and layer management

### 🛠️ Available Tools (11 Tools)

| Tool Name | Description | Key Parameters |
|-----------|-------------|----------------|
| `create_draft` | Create a new video draft project | width, height |
| `add_text` | Add text elements | text, font_size, font_color, shadow, background |
| `add_video` | Add a video track | video_url, start, end, transform, volume |
| `add_audio` | Add an audio track | audio_url, volume, speed, effects |
| `add_image` | Add image assets | image_url, transform, animation, transition |
| `add_subtitle` | Add a subtitle file | srt_path, font_style, position |
| `add_effect` | Add visual effects | effect_type, parameters, duration |
| `add_sticker` | Add sticker elements | resource_id, position, scale, rotation |
| `add_video_keyframe` | Add keyframe animations | property_types, times, values |
| `get_video_duration` | Get a video's duration | video_url |
| `save_draft` | Save a draft project | draft_id |

## Installation & Setup

### Requirements

- Python 3.10+
- CapCut application (macOS/Windows)
- An MCP-compatible client

### Dependencies Installation

```bash
# Create a virtual environment
python3.10 -m venv venv-mcp
source venv-mcp/bin/activate  # macOS/Linux
# or: venv-mcp\Scripts\activate  # Windows

# Install dependencies
pip install -r requirements-mcp.txt
```

### MCP Configuration

Create or update the `mcp_config.json` file:

```json
{
  "mcpServers": {
    "capcut-api": {
      "command": "python3.10",
      "args": ["mcp_server.py"],
      "cwd": "/path/to/CapCutAPI-dev",
      "env": {
        "PYTHONPATH": "/path/to/CapCutAPI-dev"
      }
    }
  }
}
```
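
If you prefer to generate `mcp_config.json` rather than edit it by hand, the following is a minimal sketch. It assumes you run it from the repository root and that `python3.10` is on your `PATH`; `build_mcp_config` is a hypothetical helper name, and where your MCP client actually reads its configuration from depends on that client.

```python
import json
import os


def build_mcp_config(repo_dir, python_cmd="python3.10"):
    """Return the mcp_config.json structure for the capcut-api server (hypothetical helper)."""
    repo_dir = os.path.abspath(repo_dir)  # MCP clients usually need absolute paths
    return {
        "mcpServers": {
            "capcut-api": {
                "command": python_cmd,
                "args": ["mcp_server.py"],
                "cwd": repo_dir,
                "env": {"PYTHONPATH": repo_dir},
            }
        }
    }


if __name__ == "__main__":
    # Print to stdout so you can redirect it wherever your client expects the file.
    print(json.dumps(build_mcp_config(os.getcwd()), indent=2))
```

For example, `python make_mcp_config.py > mcp_config.json` (the script name is arbitrary) writes the same structure as the JSON above, with the placeholder paths replaced by the absolute path of your checkout.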

## Usage Guide

### Basic Workflow

#### 1. Create Draft

```python
# `mcp_client` is an already-connected MCP client.
# Create a 1080x1920 portrait project
result = mcp_client.call_tool("create_draft", {
    "width": 1080,
    "height": 1920
})
# The draft ID is nested under "result" (see Response Format below)
draft_id = result["result"]["draft_id"]
```

#### 2. Add Content

```python
# Add title text
mcp_client.call_tool("add_text", {
    "text": "My Video Title",
    "start": 0,
    "end": 5,
    "draft_id": draft_id,
    "font_size": 48,
    "font_color": "#FFFFFF"
})

# Add a background video
mcp_client.call_tool("add_video", {
    "video_url": "https://example.com/video.mp4",
    "draft_id": draft_id,
    "start": 0,
    "end": 10,
    "volume": 0.8
})
```

#### 3. Save Project

```python
# Save the draft
result = mcp_client.call_tool("save_draft", {
    "draft_id": draft_id
})
```
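
`mcp_client` in these snippets stands for whichever MCP client library you use. As a point of reference, the MCP specification encodes each tool invocation as a JSON-RPC 2.0 `tools/call` request, and the stdio transport sends one JSON message per line. The sketch below only builds those request lines for the workflow above; `ToolCallEncoder` is a hypothetical name, and a real client must also perform the `initialize` handshake and read responses from the server's stdout.

```python
import json
from itertools import count


class ToolCallEncoder:
    """Builds newline-delimited JSON-RPC 2.0 `tools/call` requests (hypothetical helper)."""

    def __init__(self):
        self._ids = count(1)  # request ids must be unique within a session

    def call_tool(self, name, arguments):
        message = {
            "jsonrpc": "2.0",
            "id": next(self._ids),
            "method": "tools/call",
            "params": {"name": name, "arguments": arguments},
        }
        # stdio transport: one JSON object per line, with no embedded newlines
        return json.dumps(message, ensure_ascii=False) + "\n"


encoder = ToolCallEncoder()
print(encoder.call_tool("create_draft", {"width": 1080, "height": 1920}), end="")
print(encoder.call_tool("save_draft", {"draft_id": "dfd_cat_xxx"}), end="")
```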

### Advanced Features

#### Text Styling

```python
# Text with shadow and background
mcp_client.call_tool("add_text", {
    "text": "Advanced Text Effects",
    "draft_id": draft_id,
    "font_size": 56,
    "font_color": "#FFD700",
    "shadow_enabled": True,
    "shadow_color": "#000000",
    "shadow_alpha": 0.8,
    "background_color": "#1E1E1E",
    "background_alpha": 0.7,
    "background_round_radius": 15
})
```

#### Keyframe Animation

```python
# Scale and opacity animation
mcp_client.call_tool("add_video_keyframe", {
    "draft_id": draft_id,
    "track_name": "video_main",
    "property_types": ["scale_x", "scale_y", "alpha"],
    "times": [0, 2, 4],
    "values": ["1.0", "1.5", "0.5"]
})
```
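
The keyframe example appears to use parallel lists: entry *i* sets `property_types[i]` to `values[i]` at `times[i]` seconds, with values passed as strings. Assuming that reading is correct, building the lists from one tuple per keyframe keeps them aligned. `build_keyframe_args` is a hypothetical helper, not part of the server:

```python
def build_keyframe_args(draft_id, track_name, keyframes):
    """Build add_video_keyframe arguments from (property_type, time_s, value) tuples.

    Keeping each keyframe in one tuple means the three parallel lists can never
    drift out of sync. The parallel-list layout is inferred from the example above.
    """
    keyframes = list(keyframes)
    if not keyframes:
        raise ValueError("at least one keyframe is required")
    for prop, time_s, _ in keyframes:
        if time_s < 0:
            raise ValueError(f"keyframe for {prop!r} has negative time {time_s}")
    return {
        "draft_id": draft_id,
        "track_name": track_name,
        "property_types": [prop for prop, _, _ in keyframes],
        "times": [time_s for _, time_s, _ in keyframes],
        "values": [str(value) for _, _, value in keyframes],  # examples pass strings
    }


args = build_keyframe_args(
    "dfd_cat_xxx", "video_main",
    [("scale_x", 0, 1.0), ("scale_y", 2, 1.5), ("alpha", 4, 0.5)],
)
print(args["values"])  # ['1.0', '1.5', '0.5']
```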

#### Multi-Style Text

```python
# Text segments in different colors
mcp_client.call_tool("add_text", {
    "text": "Colorful Text Effect",
    "draft_id": draft_id,
    "text_styles": [
        {"start": 0, "end": 2, "font_color": "#FF0000"},
        {"start": 2, "end": 4, "font_color": "#00FF00"}
    ]
})
```
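
In `text_styles`, `start` and `end` look like character offsets into `text` rather than seconds (here they color the first four characters). Under that assumption, and treating each range as half-open, a client-side check such as the hypothetical `check_text_styles` below can reject empty, overlapping, or out-of-range styles before calling `add_text`:

```python
def check_text_styles(text, text_styles):
    """Validate text_styles ranges and return them sorted as (start, end) tuples.

    Assumes start/end are half-open character offsets [start, end) into `text`.
    """
    ranges = sorted((style["start"], style["end"]) for style in text_styles)
    prev_end = 0
    for start, end in ranges:
        if not 0 <= start < end <= len(text):
            raise ValueError(f"range [{start}, {end}) is empty or outside 0..{len(text)}")
        if start < prev_end:
            raise ValueError(f"range [{start}, {end}) overlaps a previous range")
        prev_end = end
    return ranges


styles = [
    {"start": 0, "end": 2, "font_color": "#FF0000"},
    {"start": 2, "end": 4, "font_color": "#00FF00"},
]
print(check_text_styles("Colorful Text Effect", styles))  # [(0, 2), (2, 4)]
```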

## Testing & Validation

### Using Test Client

```bash
# Run the test client
python test_mcp_client.py
```

### Functionality Checklist

- [ ] Server starts successfully
- [ ] Tool list can be retrieved
- [ ] Drafts can be created
- [ ] Text can be added
- [ ] Video, audio, and images can be added
- [ ] Effects and animations work
- [ ] Drafts can be saved

## Troubleshooting

### Common Issues

#### 1. "CapCut modules not available"

**Solution**:

- Confirm that the CapCut application is installed
- Check the Python path configuration (`PYTHONPATH` in `mcp_config.json`)
- Verify that the dependencies are installed (`pip install -r requirements-mcp.txt`)

#### 2. Server startup failure

**Solution**:

- Check that the virtual environment is activated
- Verify the paths in the configuration file
- Review the error logs

#### 3. Tool call errors

**Solution**:

- Check the parameter format
- Verify that media file URLs are reachable
- Confirm that time ranges are valid (`start` before `end`, in seconds)

### Debug Mode

```bash
# Enable verbose logging
export DEBUG=1  # on Windows (cmd): set DEBUG=1
python mcp_server.py
```
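
How `mcp_server.py` interprets `DEBUG` is not specified in this document. The sketch below shows one common pattern, mapping the variable to a `logging` level; treat it as an assumption rather than the server's actual code (the logger name `capcut-mcp` is made up). It logs to stderr because, with the stdio transport, stdout carries the MCP messages themselves.

```python
import logging
import os
import sys


def log_level_from_env(env=None):
    """Map DEBUG=1/true/yes to logging.DEBUG and anything else to logging.INFO (assumed convention)."""
    env = os.environ if env is None else env
    flag = env.get("DEBUG", "0").strip().lower()
    return logging.DEBUG if flag in {"1", "true", "yes"} else logging.INFO


# stdout is reserved for MCP messages on the stdio transport, so log to stderr.
logging.basicConfig(
    stream=sys.stderr,
    level=log_level_from_env(),
    format="%(asctime)s %(levelname)s %(name)s: %(message)s",
)
logging.getLogger("capcut-mcp").debug("verbose logging enabled")
```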

## Best Practices

### Performance Optimization

1. **Media Files**: Use compressed formats and avoid oversized files
2. **Time Management**: Plan element timelines carefully and avoid unintended overlaps
3. **Memory Usage**: Save drafts promptly and clean up temporary files

### Error Handling

1. **Parameter Validation**: Check required parameters before each call
2. **Exception Handling**: Catch network and file errors
3. **Retry Mechanism**: Retry calls that fail for temporary reasons
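
The three error-handling practices can be combined in one wrapper. This is a sketch: `call` stands for whatever function performs the tool call (for example `mcp_client.call_tool`), and treating `ConnectionError` and `TimeoutError` as temporary failures is an assumption to adapt to your client library. `call_with_retry` and `flaky` are hypothetical names.

```python
import time


def call_with_retry(call, tool, args, required=("draft_id",), retries=3,
                    base_delay=0.5, transient=(ConnectionError, TimeoutError)):
    """Validate parameters, then run call(tool, args), retrying transient errors."""
    # 1. Parameter validation: fail fast instead of sending a doomed request.
    missing = [name for name in required if args.get(name) in (None, "")]
    if missing:
        raise ValueError(f"{tool}: missing required parameters {missing}")
    if "start" in args and "end" in args and args["start"] >= args["end"]:
        raise ValueError(f"{tool}: start ({args['start']}) must be before end ({args['end']})")

    # 2. and 3. Catch temporary errors and retry with exponential backoff: 0.5 s, 1 s, 2 s, ...
    for attempt in range(retries + 1):
        try:
            return call(tool, args)
        except transient:
            if attempt == retries:
                raise  # out of attempts: surface the last error
            time.sleep(base_delay * 2 ** attempt)


attempts = []


def flaky(tool, args):
    """Stand-in for a real tool call that fails twice, then succeeds."""
    attempts.append(tool)
    if len(attempts) < 3:
        raise ConnectionError("temporary failure")
    return {"success": True}


print(call_with_retry(flaky, "save_draft", {"draft_id": "dfd_cat_xxx"}, base_delay=0))
# {'success': True}
```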

## API Reference

### Common Parameters

- `draft_id`: Unique draft identifier
- `start`/`end`: Time range, in seconds
- `width`/`height`: Project dimensions
- `transform_x`/`transform_y`: Position coordinates
- `scale_x`/`scale_y`: Scale ratios

### Response Format

```json
{
  "success": true,
  "result": {
    "draft_id": "dfd_cat_xxx",
    "draft_url": "https://..."
  },
  "features_used": {
    "shadow": false,
    "background": false,
    "multi_style": false
  }
}
```
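
Because every tool returns this envelope, a client can unwrap it in one place. The sketch assumes the shape shown above; the `error` key it reads on failure is a guess, since only a successful response is documented here. `unwrap_result` and `ToolError` are hypothetical names.

```python
import json


class ToolError(RuntimeError):
    """Raised when a response reports "success": false."""


def unwrap_result(raw):
    """Return the `result` object of a response (JSON string or dict); raise ToolError on failure."""
    response = json.loads(raw) if isinstance(raw, str) else raw
    if not response.get("success", False):
        # Only the success shape is documented; the "error" key is an assumption.
        raise ToolError(response.get("error", response))
    return response["result"]


sample = """{
  "success": true,
  "result": {"draft_id": "dfd_cat_xxx", "draft_url": "https://..."},
  "features_used": {"shadow": false, "background": false, "multi_style": false}
}"""
print(unwrap_result(sample)["draft_id"])  # dfd_cat_xxx
```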

## Changelog

### v1.0.0

- Initial release
- Support for 11 core tools
- Complete MCP protocol implementation

## Technical Support

For questions or suggestions, contact us through:

- GitHub Issues
- Technical Documentation
- Community Forums

---

*This documentation is continuously updated. Please refer to the latest version.*

**`MCP_文档_中文.md`** · 254 · Normal file

`@@ -0,0 +1,254 @@`

# CapCut API MCP Server Usage Guide

## Overview

The CapCut API MCP Server is a video editing service built on the Model Context Protocol (MCP) that provides a complete set of CapCut video editing interfaces. Through MCP, you can easily integrate professional-grade video editing into a wide range of applications.

## Features

### 🎬 Core Features

- **Draft management**: Create, save, and manage video projects
- **Multimedia support**: Video, audio, image, and text processing
- **Advanced effects**: Effects, animations, transitions, and filters
- **Precise control**: Timeline, keyframes, and layer management

### 🛠️ Available Tools (11)

| Tool Name | Description | Key Parameters |
|-----------|-------------|----------------|
| `create_draft` | Create a new video draft project | width, height |
| `add_text` | Add text elements | text, font_size, font_color, shadow, background |
| `add_video` | Add a video track | video_url, start, end, transform, volume |
| `add_audio` | Add an audio track | audio_url, volume, speed, effects |
| `add_image` | Add image assets | image_url, transform, animation, transition |
| `add_subtitle` | Add a subtitle file | srt_path, font_style, position |
| `add_effect` | Add visual effects | effect_type, parameters, duration |
| `add_sticker` | Add sticker elements | resource_id, position, scale, rotation |
| `add_video_keyframe` | Add keyframe animations | property_types, times, values |
| `get_video_duration` | Get a video's duration | video_url |
| `save_draft` | Save a draft project | draft_id |

## Installation & Configuration

### Requirements

- Python 3.10+
- CapCut application (macOS/Windows)
- An MCP-compatible client

### Installing Dependencies

```bash
# Create a virtual environment
python3.10 -m venv venv-mcp
source venv-mcp/bin/activate  # macOS/Linux
# or: venv-mcp\Scripts\activate  # Windows

# Install dependencies
pip install -r requirements-mcp.txt
```

### MCP Configuration

Create or update the `mcp_config.json` file:

```json
{
  "mcpServers": {
    "capcut-api": {
      "command": "python3.10",
      "args": ["mcp_server.py"],
      "cwd": "/path/to/CapCutAPI-dev",
      "env": {
        "PYTHONPATH": "/path/to/CapCutAPI-dev"
      }
    }
  }
}
```

## Usage Guide

### Basic Workflow

#### 1. Create a Draft

```python
# `mcp_client` is an already-connected MCP client.
# Create a 1080x1920 portrait project
result = mcp_client.call_tool("create_draft", {
    "width": 1080,
    "height": 1920
})
# The draft ID is nested under "result" (see Return Format below)
draft_id = result["result"]["draft_id"]
```

#### 2. Add Content

```python
# Add title text
mcp_client.call_tool("add_text", {
    "text": "My Video Title",
    "start": 0,
    "end": 5,
    "draft_id": draft_id,
    "font_size": 48,
    "font_color": "#FFFFFF"
})

# Add a background video
mcp_client.call_tool("add_video", {
    "video_url": "https://example.com/video.mp4",
    "draft_id": draft_id,
    "start": 0,
    "end": 10,
    "volume": 0.8
})
```

#### 3. Save the Project

```python
# Save the draft
result = mcp_client.call_tool("save_draft", {
    "draft_id": draft_id
})
```

### Advanced Feature Examples

#### Text Style Settings

```python
# Text with shadow and background
mcp_client.call_tool("add_text", {
    "text": "Advanced Text Effects",
    "draft_id": draft_id,
    "font_size": 56,
    "font_color": "#FFD700",
    "shadow_enabled": True,
    "shadow_color": "#000000",
    "shadow_alpha": 0.8,
    "background_color": "#1E1E1E",
    "background_alpha": 0.7,
    "background_round_radius": 15
})
```

#### Keyframe Animation

```python
# Scale and opacity animation
mcp_client.call_tool("add_video_keyframe", {
    "draft_id": draft_id,
    "track_name": "video_main",
    "property_types": ["scale_x", "scale_y", "alpha"],
    "times": [0, 2, 4],
    "values": ["1.0", "1.5", "0.5"]
})
```

#### Multi-Style Text

```python
# Text segments in different colors
mcp_client.call_tool("add_text", {
    "text": "Colorful Text Effect",
    "draft_id": draft_id,
    "text_styles": [
        {"start": 0, "end": 2, "font_color": "#FF0000"},
        {"start": 2, "end": 4, "font_color": "#00FF00"}
    ]
})
```

## Testing & Validation

### Using the Test Client

```bash
# Run the test client
python test_mcp_client.py
```

### Feature Checklist

- [ ] Server starts successfully
- [ ] Tool list can be retrieved
- [ ] Drafts can be created
- [ ] Text can be added
- [ ] Video, audio, and images can be added
- [ ] Effects and animations work
- [ ] Drafts can be saved

## Troubleshooting

### Common Issues

#### 1. "CapCut modules not available"

**Solution**:

- Confirm that the CapCut application is installed
- Check the Python path configuration (`PYTHONPATH` in `mcp_config.json`)
- Verify that the dependencies are installed (`pip install -r requirements-mcp.txt`)

#### 2. Server fails to start

**Solution**:

- Check that the virtual environment is activated
- Verify the paths in the configuration file
- Review the error logs

#### 3. Tool call errors

**Solution**:

- Check the parameter format
- Verify that media file URLs are reachable
- Confirm that time ranges are valid (`start` before `end`, in seconds)

### Debug Mode

```bash
# Enable verbose logging
export DEBUG=1  # on Windows (cmd): set DEBUG=1
python mcp_server.py
```

## Best Practices

### Performance Optimization

1. **Media files**: Use compressed formats and avoid oversized files
2. **Time management**: Plan element timelines carefully and avoid unintended overlaps
3. **Memory usage**: Save drafts promptly and clean up temporary files

### Error Handling

1. **Parameter validation**: Check required parameters before each call
2. **Exception handling**: Catch network and file errors
3. **Retry mechanism**: Retry calls that fail for temporary reasons

## API Reference

### Common Parameters

- `draft_id`: Unique draft identifier
- `start`/`end`: Time range, in seconds
- `width`/`height`: Project dimensions
- `transform_x`/`transform_y`: Position coordinates
- `scale_x`/`scale_y`: Scale ratios

### Return Format

```json
{
  "success": true,
  "result": {
    "draft_id": "dfd_cat_xxx",
    "draft_url": "https://..."
  },
  "features_used": {
    "shadow": false,
    "background": false,
    "multi_style": false
  }
}
```

## Changelog

### v1.0.0

- Initial release
- Support for 11 core tools
- Complete MCP protocol implementation

## Technical Support

For questions or suggestions, contact us through:

- GitHub Issues
- Technical documentation
- Community forums

---

*This document is continuously updated. Please refer to the latest version.*

**`README-zh.md`** · 325

`@@ -1,19 +1,37 @@`

|

||||

# CapCutAPI

|

||||

# 通过CapCutAPI连接AI生成的一切 [在线体验](https://www.capcutapi.top)

|

||||

|

||||

轻量、灵活、易上手的剪映/CapCutAPI工具,构建全自动化视频剪辑/混剪流水线。

|

||||

|

||||

直接体验:https://www.capcutapi.top

|

||||

<div align="center">

|

||||

|

||||

```

|

||||

👏👏👏👏 庆祝github 600星,送出价值6000点不记名云渲染券:17740F41-5ECB-44B1-AAAE-1C458A0EFF43

|

||||

👏👏👏👏 庆祝github 800星,送出价值8000点不记名云渲染券:040346B5-8D8F-459E-8EE7-332C0B827117

|

||||

```

|

||||

</div>

|

||||

|

||||

## 项目概览

|

||||

|

||||

**CapCutAPI** 是一款强大的云端 剪辑 API,它赋予您对 AI 生成素材(包括图片、音频、视频和文字)的精确控制权。

|

||||

它提供了精确的编辑能力来拼接原始的 AI 输出,例如给视频变速或将图片镜像反转。这种能力有效地解决了 AI 生成的结果缺乏精确控制,难以复制的问题,让您能够轻松地将创意想法转化为精致的视频。

|

||||

所有这些功能均旨在对标剪映软件的功能,确保您在云端也能获得熟悉且高效的剪辑体验。

|

||||

|

||||

### 核心优势

|

||||

|

||||

1. 通过API的方式,提供对标剪映/CapCut的剪辑能力。

|

||||

|

||||

2. 可以在网页实时预览剪辑结果,无需下载,极大方便工作流开发。

|

||||

|

||||

3. 可以下载剪辑结果,并导入到剪映/CapCut中二次编辑。

|

||||

|

||||

4. 可以利用API将剪辑结果生成视频,实现全云端操作。

|

||||

|

||||

## 效果展示

|

||||

|

||||

<div align="center">

|

||||

|

||||

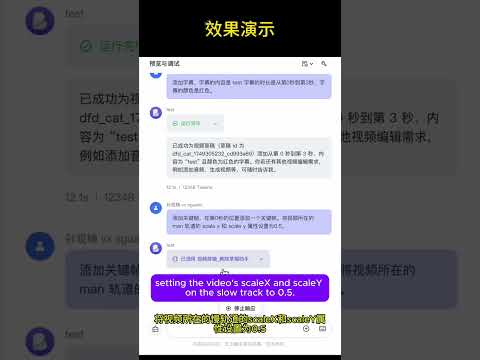

## 效果演示

|

||||

**MCP,创建属于自己的剪辑Agent**

|

||||

|

||||

[](https://www.youtube.com/watch?v=fBqy6WFC78E)

|

||||

|

||||

**通过工具,将AI生成的图片,视频组合起来**

|

||||

**通过CapCutAPI,将AI生成的图片,视频组合起来**

|

||||

|

||||

[](https://www.youtube.com/watch?v=1zmQWt13Dx0)

|

||||

|

||||

@@ -21,154 +39,259 @@

|

||||

|

||||

[](https://www.youtube.com/watch?v=rGNLE_slAJ8)

|

||||

|

||||

## 项目功能

|

||||

</div>

|

||||

|

||||

本项目是一个基于Python的剪映/CapCut处理工具,提供以下核心功能:

|

||||

## 核心功能

|

||||

|

||||

### 核心功能

|

||||

|

||||

- **草稿文件管理**:创建、读取、修改和保存剪映/CapCut草稿文件

|

||||

- **素材处理**:支持视频、音频、图片、文本、贴纸等多种素材的添加和编辑

|

||||

- **特效应用**:支持添加转场、滤镜、蒙版、动画等多种特效

|

||||

- **API服务**:提供HTTP API接口,支持远程调用和自动化处理

|

||||

- **AI集成**:集成多种AI服务,支持智能生成字幕、文本和图像

|

||||

| 功能模块 | API | MCP 协议 | 描述 |

|

||||

|---------|----------|----------|------|

|

||||

| **草稿管理** | ✅ | ✅ | 创建、保存剪映/CapCut草稿文件 |

|

||||

| **视频处理** | ✅ | ✅ | 多格式视频导入、剪辑、转场、特效 |

|

||||

| **音频编辑** | ✅ | ✅ | 音频轨道、音量控制、音效处理 |

|

||||

| **图像处理** | ✅ | ✅ | 图片导入、动画、蒙版、滤镜 |

|

||||

| **文本编辑** | ✅ | ✅ | 多样式文本、阴影、背景、动画 |

|

||||

| **字幕系统** | ✅ | ✅ | SRT 字幕导入、样式设置、时间同步 |

|

||||

| **特效引擎** | ✅ | ✅ | 视觉特效、滤镜、转场动画 |

|

||||

| **贴纸系统** | ✅ | ✅ | 贴纸素材、位置控制、动画效果 |

|

||||

| **关键帧** | ✅ | ✅ | 属性动画、时间轴控制、缓动函数 |

|

||||

| **媒体分析** | ✅ | ✅ | 视频时长获取、格式检测 |

|

||||

|

||||

### 主要API接口

|

||||

## 快速开始

|

||||

|

||||

- `/create_draft`创建草稿

|

||||

- `/add_video`:添加视频素材到草稿

|

||||

- `/add_audio`:添加音频素材到草稿

|

||||

- `/add_image`:添加图片素材到草稿

|

||||

- `/add_text`:添加文本素材到草稿

|

||||

- `/add_subtitle`:添加字幕到草稿

|

||||

- `/add_effect`:添加特效到素材

|

||||

- `/add_sticker`:添加贴纸到草稿

|

||||

- `/save_draft`:保存草稿文件

|

||||

### 1. 系统要求

|

||||

|

||||

## 配置说明

|

||||

- Python 3.10+

|

||||

- 剪映 或 CapCut 国际版

|

||||

- FFmpeg

|

||||

|

||||

### 配置文件

|

||||

|

||||

项目支持通过配置文件进行自定义设置。要使用配置文件:

|

||||

|

||||

1. 复制`config.json.example`为`config.json`

|

||||

2. 根据需要修改配置项

|

||||

### 2. 安装部署

|

||||

|

||||

```bash

|

||||

# 1. 克隆项目

|

||||

git clone https://github.com/sun-guannan/CapCutAPI.git

|

||||

cd CapCutAPI

|

||||

|

||||

# 2. 创建虚拟环境 (推荐)

|

||||

python -m venv venv-capcut

|

||||

source venv-capcut/bin/activate # Linux/macOS

|

||||

# 或 venv-capcut\Scripts\activate # Windows

|

||||

|

||||

# 3. 安装依赖

|

||||

pip install -r requirements.txt # HTTP API 基础依赖

|

||||

pip install -r requirements-mcp.txt # MCP 协议支持 (可选)

|

||||

|

||||

# 4. 配置文件

|

||||

cp config.json.example config.json

|

||||

# 根据需要编辑 config.json

|

||||

```

|

||||

|

||||

### 环境配置

|

||||

|

||||

#### ffmpeg

|

||||

|

||||

本项目依赖于ffmpeg,您需要确保系统中已安装ffmpeg,并且将其添加到系统的环境变量中。

|

||||

|

||||

#### Python 环境

|

||||

|

||||

本项目需要 Python 3.8.20 版本,请确保您的系统已安装正确版本的 Python。

|

||||

|

||||

#### 安装依赖

|

||||

|

||||

安装项目所需的依赖包:

|

||||

### 3. 启动服务

|

||||

|

||||

```bash

|

||||

pip install -r requirements.txt

|

||||

python capcut_server.py # 启动HTTP API服务器, 默认端口: 9001

|

||||

|

||||

python mcp_server.py # 启动 MCP 协议服务,支持 stdio 通信

|

||||

```

|

||||

|

||||

### 运行服务器

|

||||

## MCP 集成指南

|

||||

|

||||

完成配置和环境设置后,执行以下命令启动服务器:

|

||||

[MCP 文档](./MCP_文档_中文.md) • [MCP English Guide](./MCP_Documentation_English.md)

|

||||

|

||||

### 1. 客户端配置

|

||||

|

||||

创建或更新 `mcp_config.json` 配置文件:

|

||||

|

||||

```json

|

||||

{

|

||||

"mcpServers": {

|

||||

"capcut-api": {

|

||||

"command": "python3",

|

||||

"args": ["mcp_server.py"],

|

||||

"cwd": "/path/to/CapCutAPI",

|

||||

"env": {

|

||||

"PYTHONPATH": "/path/to/CapCutAPI",

|

||||

"DEBUG": "0"

|

||||

}

|

||||

}

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

### 2. 连接测试

|

||||

|

||||

```bash

|

||||

python capcut_server.py

|

||||

```

|

||||

# 测试 MCP 连接

|

||||

python test_mcp_client.py

|

||||

|

||||

服务器启动后,您可以通过 API 接口访问相关功能。

|

||||

# 预期输出

|

||||

✅ MCP 服务器启动成功

|

||||

✅ 获取到 11 个可用工具

|

||||

✅ 草稿创建测试通过

|

||||

```

|

||||

|

||||

## Usage Examples

### Add a Video

```python
import requests

response = requests.post("http://localhost:9001/add_video", json={
    "video_url": "http://example.com/video.mp4",
    "start": 0,
    "end": 10,
    "width": 1080,
    "height": 1920
})

print(response.json())
```

### 1. API Examples

Add video material:

```python
import requests

# Add a background video
response = requests.post("http://localhost:9001/add_video", json={
    "video_url": "https://example.com/background.mp4",
    "start": 0,
    "end": 10,
    "volume": 0.8,
    "transition": "fade_in"
})

print(f"Video added: {response.json()}")
```

Create styled text:

```python
import requests

# Add a title
response = requests.post("http://localhost:9001/add_text", json={
    "text": "Welcome to CapCutAPI",
    "start": 0,
    "end": 5,
    "font": "思源黑体",  # font name (Source Han Sans)
    "font_color": "#FFD700",
    "font_size": 48,
    "shadow_enabled": True,
    "background_color": "#000000"
})

print(f"Text added: {response.json()}")
```

More examples are available in `example.py`.

### 2. MCP Protocol Examples

Full workflow:

```python
# `mcp_client` is an already-connected MCP client.
# 1. Create a new project
draft = mcp_client.call_tool("create_draft", {
    "width": 1080,
    "height": 1920
})
draft_id = draft["result"]["draft_id"]

# 2. Add a background video
mcp_client.call_tool("add_video", {
    "video_url": "https://example.com/bg.mp4",
    "draft_id": draft_id,
    "start": 0,
    "end": 10,
    "volume": 0.6
})

# 3. Add a title
mcp_client.call_tool("add_text", {
    "text": "AI-Powered Video Production",
    "draft_id": draft_id,
    "start": 1,
    "end": 6,
    "font_size": 56,
    "shadow_enabled": True,
    "background_color": "#1E1E1E"
})

# 4. Add keyframe animation
mcp_client.call_tool("add_video_keyframe", {
    "draft_id": draft_id,
    "track_name": "main",
    "property_types": ["scale_x", "scale_y", "alpha"],
    "times": [0, 2, 4],
    "values": ["1.0", "1.2", "0.8"]
})

# 5. Save the project
result = mcp_client.call_tool("save_draft", {
    "draft_id": draft_id
})

print(f"Project saved: {result['result']['draft_url']}")
```

### Add Text

```python
import requests

response = requests.post("http://localhost:9001/add_text", json={
    "text": "Hello, world!",
    "start": 0,
    "end": 3,
    "font": "思源黑体",  # font name (Source Han Sans)
    "font_color": "#FF0000",
    "font_size": 30.0
})

print(response.json())
```

### Save Draft

```python
import requests

response = requests.post("http://localhost:9001/save_draft", json={
    "draft_id": "123456",
    "draft_folder": "your capcut draft folder"  # placeholder: your CapCut draft folder path
})

print(response.json())
```

Advanced text effects:

```python
# Multi-style colored text
mcp_client.call_tool("add_text", {
    "text": "Colorful text effect demo",
    "draft_id": draft_id,
    "start": 2,
    "end": 8,
    "font_size": 42,
    "shadow_enabled": True,
    "shadow_color": "#FFFFFF",
    "background_alpha": 0.8,
    "background_round_radius": 20,
    "text_styles": [
        {"start": 0, "end": 2, "font_color": "#FF6B6B"},
        {"start": 2, "end": 4, "font_color": "#4ECDC4"},
        {"start": 4, "end": 6, "font_color": "#45B7D1"}
    ]
})
```

You can also run HTTP tests with the REST Client file `rest_client_test.http`; just install the corresponding IDE plugin.
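
The HTTP examples above use `requests`. If you would rather avoid that dependency, the same JSON POST can be sent with the standard library. This sketch assumes `capcut_server.py` is listening on its default port 9001 and that endpoints accept and return JSON, as in the examples; `build_request` and `post_json` are hypothetical helper names.

```python
import json
import urllib.request

BASE_URL = "http://localhost:9001"  # default port of capcut_server.py


def build_request(endpoint, payload, base_url=BASE_URL):
    """Build a JSON POST request for an endpoint such as /add_text."""
    body = json.dumps(payload, ensure_ascii=False).encode("utf-8")
    return urllib.request.Request(
        base_url.rstrip("/") + "/" + endpoint.lstrip("/"),
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_json(endpoint, payload, timeout=30.0):
    """Send the request and decode the JSON response (requires a running server)."""
    with urllib.request.urlopen(build_request(endpoint, payload), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))
```

With the server running, `post_json("/add_text", {"text": "Hello, world!", "start": 0, "end": 3})` returns the parsed response, like `response.json()` in the `requests` version. Keeping request construction separate from sending makes the payload easy to inspect or test without a server.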

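### Copy the Draft to the Jianying/CapCut Draft Folder

Calling `save_draft` generates a folder whose name starts with `dfd_` in the server's current directory. Copy it into the Jianying/CapCut draft directory and the generated draft will appear.

### 3. Download the Draft

Calling `save_draft` generates a folder whose name starts with `dfd_` in the directory `capcut_server.py` runs from. Copy it into the Jianying/CapCut draft directory and the generated draft will appear in the app.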
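
The copy step can be scripted. This is a minimal sketch that assumes the `dfd_` folders are in the directory where `capcut_server.py` was started and that you supply the Jianying/CapCut draft directory yourself (its location varies by platform and installation and is not given here). `copy_drafts` is a hypothetical helper; by default it skips drafts that already exist in the target directory.

```python
import shutil
from pathlib import Path


def copy_drafts(server_dir, capcut_draft_dir, overwrite=False):
    """Copy every dfd_* folder from server_dir into the Jianying/CapCut draft directory.

    Returns the names of the folders that were copied.
    """
    src_root, dst_root = Path(server_dir), Path(capcut_draft_dir)
    copied = []
    for src in sorted(src_root.glob("dfd_*")):
        if not src.is_dir():
            continue  # only draft folders, not stray files
        dst = dst_root / src.name
        if dst.exists():
            if not overwrite:
                continue  # keep the copy the editor already knows about
            shutil.rmtree(dst)
        shutil.copytree(src, dst)
        copied.append(src.name)
    return copied
```

For example, `copy_drafts(".", "/path/to/your/draft/folder")` copies new drafts; pass `overwrite=True` to refresh drafts you have re-saved.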

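### More Examples

See the project's `example.py` file for more usage examples, such as adding audio and adding effects.

## Templates

We have collected some templates in the `pattern` folder.

## Community & Support

We welcome contributions of all kinds! Our iteration rules:

- Do not open PRs directly against `main`
- Open PRs against the `dev` branch
- Every Monday, `dev` is merged into `main` and a release is published

## Project Highlights

- **Cross-platform support**: Supports both Jianying and CapCut International
- **Automated processing**: Supports batch processing and automated workflows
- **Rich API**: Comprehensive API endpoints for easy integration into other systems
- **Flexible configuration**: Customize functionality through a configuration file
- **AI enhancement**: Integrates multiple AI services to make video production more efficient

## Join the Group Chat

- Report issues
- Suggest features
- Get the latest news

## Collaboration

- Want to use this API to mass-produce videos for **overseas markets**?

  I offer free consulting to help you build them with this API. In return, I will publish the workflow code in this project.

- Interested in joining us?

  Our goal is to provide a stable, reliable video editing tool that makes it easy to combine AI-generated images, video, and voice. If you are interested, you can start by translating the Chinese in the project into English! Submit a PR and I will see it. Going further, three modules have not been open-sourced yet: the MCP editing agent, the web editor, and cloud rendering.

- Contact
  - WeChat: sguann
  - Douyin: 剪映草稿助手

### 🤝 Collaboration Opportunities

- **Videos for overseas markets**: Want to use this API to mass-produce videos for overseas markets? I offer free consulting to help you do it with this API. In return, I will **open-source** the resulting workflow templates in this project's `template` directory.
- **Join us**: Our goal is to provide a stable, reliable video editing tool that makes it easy to combine AI-generated images, video, and voice. If you are interested, you can start by translating the Chinese in the project into English! Submit a PR and I will see it. Going further, three modules have not been open-sourced yet: the MCP editing agent, the web editor, and cloud rendering.
- **Contact**:
  - WeChat: sguann
  - Douyin: 剪映草稿助手

## 📈 Star History

<div align="center">

<https://www.star-history.com/#sun-guannan/CapCutAPI&Date>

</div>

*Made with ❤️ by the CapCutAPI Community*

</div>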

**`README.md`** · 313

`@@ -1,18 +1,36 @@`

|

||||

# CapCutAPI

|

||||

|

||||

Open source CapCut API tool.

|

||||

# Connect AI generates via CapCutAPI [Try it online](https://www.capcutapi.top)

|

||||

|

||||

Try It: https://www.capcutapi.top

|

||||

## Project Overview

|

||||

**CapCutAPI** is a powerful editing API that empowers you to take full control of your AI-generated assets, including images, audio, video, and text. It provides the precision needed to refine and customize raw AI output, such as adjusting video speed or mirroring an image. This capability effectively solves the lack of control often found in AI video generation, allowing you to easily transform your creative ideas into polished videos.

|

||||

|

||||

[中文说明](https://github.com/sun-guannan/CapCutAPI/blob/main/README-zh.md)

|

||||

All these features are designed to mirror the functionalities of the CapCut software, ensuring a familiar and efficient editing experience in the cloud.

|

||||

|

||||

## Gallery

|

||||

Enjoy It! 😀😀😀

|

||||

|

||||

**MCP agent**

|

||||

[中文说明](README-zh.md)

|

||||

|

||||

### Advantages

|

||||

|

||||

1. **API-Powered Editing:** Access all CapCut/Jianying editing features, including multi-track editing and keyframe animation, through a powerful API.

|

||||

|

||||

2. **Real-Time Cloud Preview:** Instantly preview your edits on a webpage without downloads, dramatically improving your workflow.

|

||||

|

||||

3. **Flexible Local Editing:** Export projects as drafts to import into CapCut or Jianying for further refinement.

|

||||

|

||||

4. **Automated Cloud Generation:** Use the API to render and generate final videos directly in the cloud.

|

||||

|

||||

## Demos

|

||||

|

||||

<div align="center">

|

||||

|

||||

**MCP, create your own editing Agent**

|

||||

|

||||

[](https://www.youtube.com/watch?v=fBqy6WFC78E)

|

||||

|

||||

**Connect AI generated via CapCutAPI**

|

||||

**Combine AI-generated images and videos using CapCutAPI**

|

||||

|

||||

[More](pattern)

|

||||

|

||||

[](https://www.youtube.com/watch?v=1zmQWt13Dx0)

|

||||

|

||||

@@ -20,132 +38,251 @@ Try It: https://www.capcutapi.top


 [](https://www.youtube.com/watch?v=rGNLE_slAJ8)

-## Project Features

-This project is a Python-based CapCut processing tool that offers the following core functionalities:
+</div>

-### Core Features
+## Key Features

-- **Draft File Management**: Create, read, modify, and save CapCut draft files
-- **Material Processing**: Supports adding and editing various materials such as video, audio, images, text, and stickers
-- **Effect Application**: Supports adding effects such as transitions, filters, masks, and animations
-- **API Service**: Provides HTTP API interfaces for remote calls and automated processing
-- **AI Integration**: Integrates multiple AI services for intelligent generation of subtitles, text, and images
+| Feature Module | API | MCP Protocol | Description |
+|---------|----------|----------|------|
+| **Draft Management** | ✅ | ✅ | Create and save Jianying/CapCut draft files |
+| **Video Processing** | ✅ | ✅ | Import, clip, transition, and apply effects to multiple video formats |
+| **Audio Editing** | ✅ | ✅ | Audio tracks, volume control, sound effects processing |
+| **Image Processing** | ✅ | ✅ | Image import, animation, masks, filters |
+| **Text Editing** | ✅ | ✅ | Multi-style text, shadows, backgrounds, animations |
+| **Subtitle System** | ✅ | ✅ | SRT subtitle import, style settings, time synchronization |
+| **Effects Engine** | ✅ | ✅ | Visual effects, filters, transition animations |
+| **Sticker System** | ✅ | ✅ | Sticker assets, position control, animation effects |
+| **Keyframes** | ✅ | ✅ | Property animation, timeline control, easing functions |
+| **Media Analysis** | ✅ | ✅ | Get video duration, detect format |


-### Main API Interfaces
+## Quick Start

-- `/create_draft`: Create a draft
-- `/add_video`: Add video material to the draft
-- `/add_audio`: Add audio material to the draft
-- `/add_image`: Add image material to the draft
-- `/add_text`: Add text material to the draft
-- `/add_subtitle`: Add subtitles to the draft
-- `/add_effect`: Add effects to materials
-- `/add_sticker`: Add stickers to the draft
-- `/save_draft`: Save the draft file
+### 1. System Requirements

-## Configuration Instructions
+- Python 3.10+
+- Jianying or CapCut International version
+- FFmpeg

-### Configuration File

-The project supports custom settings through a configuration file. To use the configuration file:

-1. Copy `config.json.example` to `config.json`
-2. Modify the configuration items as needed
+### 2. Installation and Deployment

+```bash
+# 1. Clone the project
+git clone https://github.com/sun-guannan/CapCutAPI.git
+cd CapCutAPI

+# 2. Create a virtual environment (recommended)
+python -m venv venv-capcut
+source venv-capcut/bin/activate  # Linux/macOS
+# or venv-capcut\Scripts\activate  # Windows

+# 3. Install dependencies
+pip install -r requirements.txt      # HTTP API basic dependencies
+pip install -r requirements-mcp.txt  # MCP protocol support (optional)

+# 4. Configuration file
+cp config.json.example config.json
+# Edit config.json as needed
+```


-### Environment Configuration

-#### ffmpeg

-This project depends on ffmpeg. Make sure ffmpeg is installed and available on your system PATH.

-#### Python Environment

-This project requires Python version 3.8.20. Please ensure that the correct version of Python is installed on your system.

-#### Install Dependencies

-Install the required dependency packages for the project:
+### 3. Start the Service

 ```bash
-pip install -r requirements.txt
+python capcut_server.py  # Start the HTTP API server, default port: 9001

+python mcp_server.py     # Start the MCP protocol service, supports stdio communication
 ```

-### Run the Server
+## MCP Integration Guide

-After completing the configuration and environment setup, execute the following command to start the server:
+[MCP Documentation (Chinese)](./MCP_文档_中文.md) • [MCP English Guide](./MCP_Documentation_English.md)

+### 1. Client Configuration

+Create or update the `mcp_config.json` configuration file:

+```json
+{
+  "mcpServers": {
+    "capcut-api": {
+      "command": "python3",
+      "args": ["mcp_server.py"],
+      "cwd": "/path/to/CapCutAPI",
+      "env": {
+        "PYTHONPATH": "/path/to/CapCutAPI",
+        "DEBUG": "0"
+      }
+    }
+  }
+}
+```

+### 2. Connection Test


 ```bash
-python capcut_server.py
-```
+# Test MCP connection
+python test_mcp_client.py

-Once the server is running, you can access its functions through the API endpoints.
+# Expected output
+✅ MCP server started successfully
+✅ Got 11 available tools
+✅ Draft creation test passed
+```

 ## Usage Examples

-### Adding a Video
+### 1. API Example

+Add video material

 ```python
 import requests

+# Add background video
 response = requests.post("http://localhost:9001/add_video", json={
-    "video_url": "http://example.com/video.mp4",
+    "video_url": "https://example.com/background.mp4",
     "start": 0,
-    "end": 10,
+    "end": 10,
+    "volume": 0.8,
+    "transition": "fade_in"
+})

+print(f"Video addition result: {response.json()}")
+```

+Create stylized text

+```python
+import requests

+# Add title text
+response = requests.post("http://localhost:9001/add_text", json={
+    "text": "Welcome to CapCutAPI",
+    "start": 0,
+    "end": 5,
+    "font": "Source Han Sans",
+    "font_color": "#FFD700",
+    "font_size": 48,
+    "shadow_enabled": True,
+    "background_color": "#000000"
+})

+print(f"Text addition result: {response.json()}")
+```


+More examples can be found in the `example.py` file.

+### 2. MCP Protocol Example

+Complete workflow

+```python
+# 1. Create a new project
+draft = mcp_client.call_tool("create_draft", {
     "width": 1080,
     "height": 1920
 })
+draft_id = draft["result"]["draft_id"]

-print(response.json())
-```

-### Adding Text

-```python
-import requests

-response = requests.post("http://localhost:9001/add_text", json={
-    "text": "Hello, World!",
+# 2. Add background video
+mcp_client.call_tool("add_video", {
+    "video_url": "https://example.com/bg.mp4",
+    "draft_id": draft_id,
     "start": 0,
-    "end": 3,
-    "font": "ZY_Courage",
-    "font_color": "#FF0000",
-    "font_size": 30.0
+    "end": 10,
+    "volume": 0.6
 })

-print(response.json())
+# 3. Add title text
+mcp_client.call_tool("add_text", {
+    "text": "AI-Driven Video Production",
+    "draft_id": draft_id,
+    "start": 1,
+    "end": 6,
+    "font_size": 56,
+    "shadow_enabled": True,
+    "background_color": "#1E1E1E"
+})

+# 4. Add keyframe animation
+mcp_client.call_tool("add_video_keyframe", {
+    "draft_id": draft_id,
+    "track_name": "main",
+    "property_types": ["scale_x", "scale_y", "alpha"],
+    "times": [0, 2, 4],
+    "values": ["1.0", "1.2", "0.8"]
+})

+# 5. Save the project
+result = mcp_client.call_tool("save_draft", {
+    "draft_id": draft_id
+})

+print(f"Project saved: {result['result']['draft_url']}")
 ```


-### Saving a Draft
+Advanced text effects

 ```python
-import requests

-response = requests.post("http://localhost:9001/save_draft", json={
-    "draft_id": "123456",
-    "draft_folder": "your capcut draft folder"
+# Multi-style colored text
+mcp_client.call_tool("add_text", {
+    "text": "Colored text effect demonstration",
+    "draft_id": draft_id,
+    "start": 2,
+    "end": 8,
+    "font_size": 42,
+    "shadow_enabled": True,
+    "shadow_color": "#FFFFFF",
+    "background_alpha": 0.8,
+    "background_round_radius": 20,
+    "text_styles": [
+        {"start": 0, "end": 2, "font_color": "#FF6B6B"},
+        {"start": 2, "end": 4, "font_color": "#4ECDC4"},
+        {"start": 4, "end": 6, "font_color": "#45B7D1"}
+    ]
 })

-print(response.json())
 ```
 You can also use the `rest_client_test.http` file with the REST Client for HTTP testing; just install the corresponding IDE plugin.

-### Copying the Draft to CapCut Draft Path
+### 3. Downloading Drafts

-Calling `save_draft` will generate a folder starting with `dfd_` in the current directory of the server. Copy this folder to the CapCut draft directory, and you will be able to see the generated draft.
+Calling `save_draft` will generate a folder starting with `dfd_` in the current directory of `capcut_server.py`. Copy this folder to the CapCut/Jianying drafts directory to see the generated draft in the application.

-### More Examples
+## Pattern

-Please refer to the `example.py` file in the project, which contains more usage examples such as adding audio and effects.
+You can find many patterns in the `pattern` directory.

-## Project Features
+## Community & Support

-- **Cross-platform Support**: Supports both CapCut China version and CapCut International version
-- **Automated Processing**: Supports batch processing and automated workflows
-- **Rich APIs**: Provides comprehensive API interfaces for easy integration into other systems
-- **Flexible Configuration**: Enables flexible customization through configuration files
-- **AI Enhancement**: Integrates multiple AI services to improve video production efficiency
+We welcome contributions of all forms! Our iteration rules are:

+- No direct PRs to main
+- PRs can be submitted to the dev branch
+- Merges from dev to main and releases will happen every Monday


+## Contact Us

+### 🤝 Collaboration

+- **Video Production**: Want to use this API for batch production of videos with AIGC?

+- **Join us**: Our goal is to provide a stable and reliable video editing tool that integrates well with AI-generated images, videos, and audio. If you are interested, submit a PR and I will review it. For deeper involvement: the code for the MCP Editing Agent, the web-based editing client, and the cloud rendering modules has not been open-sourced yet.

+**Contact**: abelchrisnic@gmail.com

+## 📈 Star History

+<div align="center">

+[](https://www.star-history.com/#sun-guannan/CapCutAPI&Date)

+[](https://mseep.ai/app/69c38d28-a97c-4397-849d-c3e3d241b800)
+</div>

+*Made with ❤️ by the CapCutAPI Community*

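The README examples call the HTTP endpoints one at a time. The helper below is a rough sketch of chaining them from Python using only the standard library. It creates a draft, adds a video and a title, and saves the draft.

It rests on several assumptions:
- The server from `capcut_server.py` is listening on its default port 9001.
- `/create_draft` accepts `width` and `height`, as the MCP `create_draft` tool does.
- The HTTP response nests the new ID under `result.draft_id`, as in the MCP example.

`build_draft`, `http_post`, and the pluggable transport are hypothetical names. They are not part of CapCutAPI.

```python
import json
import urllib.request
from typing import Any, Callable, Dict

# Assumption: capcut_server.py is running locally on its default port (9001).
BASE_URL = "http://localhost:9001"

# A transport takes (url, json_payload) and returns the decoded JSON response.
Transport = Callable[[str, Dict[str, Any]], Dict[str, Any]]


def http_post(url: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """POST `payload` as JSON and decode the JSON response body."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))


def build_draft(transport: Transport = http_post,
                base_url: str = BASE_URL) -> Dict[str, Any]:
    """Hypothetical helper: create a draft, add a video and a title, then save."""

    def call(endpoint: str, **payload: Any) -> Dict[str, Any]:
        return transport(f"{base_url}/{endpoint}", payload)

    # Assumption: the new ID is returned under result.draft_id, as in the
    # README's MCP example; adjust this if your server responds differently.
    draft = call("create_draft", width=1080, height=1920)
    draft_id = draft["result"]["draft_id"]

    call("add_video", draft_id=draft_id,
         video_url="https://example.com/background.mp4",
         start=0, end=10, volume=0.8)
    call("add_text", draft_id=draft_id, text="Welcome to CapCutAPI",
         start=0, end=5, font_color="#FFD700", font_size=48)
    return call("save_draft", draft_id=draft_id)
```

Calling `build_draft()` with the default transport sends real requests. Passing a stub transport lets you check the call sequence without a running server.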

@@ -1,12 +1,13 @@

 from pyJianYingDraft import trange, Video_scene_effect_type, Video_character_effect_type, CapCut_Video_scene_effect_type, CapCut_Video_character_effect_type, exceptions
 import pyJianYingDraft as draft
-from typing import Optional, Dict, List, Union
+from typing import Optional, Dict, List, Union, Literal
 from create_draft import get_or_create_draft
 from util import generate_draft_url
 from settings import IS_CAPCUT_ENV

 def add_effect_impl(
     effect_type: str,  # Changed to string type
+    effect_category: Literal["scene", "character"],
     start: float = 0,
     end: float = 3.0,
     draft_id: Optional[str] = None,
@@ -18,6 +19,7 @@ def add_effect_impl(
     """
     Add an effect to the specified draft
     :param effect_type: Effect type name, will be matched from Video_scene_effect_type or Video_character_effect_type
+    :param effect_category: Effect category, "scene" or "character", default "scene"
     :param start: Start time (seconds), default 0
     :param end: End time (seconds), default 3 seconds
     :param draft_id: Draft ID, if None or corresponding zip file not found, a new draft will be created
@@ -38,20 +40,35 @@ def add_effect_impl(
     duration = end - start
     t_range = trange(f"{start}s", f"{duration}s")

-    # Dynamically get effect type object
+    # Select the corresponding effect type based on effect category and environment
+    effect_enum = None
     if IS_CAPCUT_ENV:
         # If in CapCut environment, use CapCut effects
-        effect_enum = CapCut_Video_scene_effect_type[effect_type]
-        if effect_enum is None:
-            effect_enum = CapCut_Video_character_effect_type[effect_type]
+        if effect_category == "scene":
+            try:
+                effect_enum = CapCut_Video_scene_effect_type[effect_type]
+            except:
+                effect_enum = None
+        elif effect_category == "character":
+            try:
+                effect_enum = CapCut_Video_character_effect_type[effect_type]
+            except:
+                effect_enum = None
     else:
         # Default to using JianYing effects
-        effect_enum = Video_scene_effect_type[effect_type]
-        if effect_enum is None:
-            effect_enum = Video_character_effect_type[effect_type]
+        if effect_category == "scene":
+            try:
+                effect_enum = Video_scene_effect_type[effect_type]
+            except:
+                effect_enum = None
+        elif effect_category == "character":
+            try:
+                effect_enum = Video_character_effect_type[effect_type]
+            except:
+                effect_enum = None

     if effect_enum is None:
-        raise ValueError(f"Unknown effect type: {effect_type}")
+        raise ValueError(f"Unknown {effect_category} effect type: {effect_type}")

     # Add effect track (only when track doesn't exist)
     if track_name is not None:

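The new lookup searches only the enum that matches `effect_category`. This sketch assumes the pyJianYingDraft effect types behave like standard `enum.Enum` classes. Under that assumption, `EnumClass[name]` raises `KeyError` for an unknown name instead of returning `None`. The old `if effect_enum is None:` fallback to the character effects could therefore never run.

The sketch below reproduces the new selection logic with two toy enums. `SceneEffect` and `CharacterEffect` are invented stand-ins, not the real effect lists. The sketch also catches `KeyError` specifically rather than using a bare `except`.

```python
from enum import Enum
from typing import Dict, Literal, Type


# Invented stand-ins for the pyJianYingDraft effect enums (illustration only).
class SceneEffect(Enum):
    Blur = "scene-blur"
    Shake = "scene-shake"


class CharacterEffect(Enum):
    Glow = "character-glow"


EFFECT_TABLES: Dict[str, Type[Enum]] = {
    "scene": SceneEffect,
    "character": CharacterEffect,
}


def lookup_effect(effect_type: str,
                  effect_category: Literal["scene", "character"]) -> Enum:
    """Resolve `effect_type` only within the enum for `effect_category`."""
    enum_cls = EFFECT_TABLES.get(effect_category)
    effect_enum = None
    if enum_cls is not None:
        try:
            # Enum[name] raises KeyError for unknown names; it never returns None.
            effect_enum = enum_cls[effect_type]
        except KeyError:
            effect_enum = None
    if effect_enum is None:
        raise ValueError(f"Unknown {effect_category} effect type: {effect_type}")
    return effect_enum
```

Catching only `KeyError` keeps unrelated errors, such as a typo in the code itself, from being reported as an unknown effect name.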

@@ -80,7 +80,7 @@ def add_subtitle_impl(

             raise Exception(f"Failed to download subtitle file: {str(e)}")
     elif os.path.isfile(srt_path):  # Check if it's a file
         try:
-            with open(srt_path, 'r', encoding='utf-8') as f:
+            with open(srt_path, 'r', encoding='utf-8-sig') as f:
                 srt_content = f.read()
         except Exception as e:
             raise Exception(f"Failed to read local subtitle file: {str(e)}")

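Switching the encoding to `utf-8-sig` matters for SRT files saved with a byte-order mark, which some editors add. With plain `utf-8`, the BOM survives as the character U+FEFF at the start of the text, and it can break parsing of the first cue number. `utf-8-sig` strips the BOM and still reads files without one unchanged.

A small standard-library check (the SRT content is illustrative):

```python
import os
import tempfile

# A UTF-8 BOM followed by the first cue of an SRT file (illustrative content).
srt_bytes = "\ufeff1\n00:00:00,000 --> 00:00:02,000\nHello\n".encode("utf-8")

with tempfile.NamedTemporaryFile("wb", suffix=".srt", delete=False) as tmp:
    tmp.write(srt_bytes)
    path = tmp.name

try:
    with open(path, "r", encoding="utf-8") as f:
        plain = f.read()
    with open(path, "r", encoding="utf-8-sig") as f:
        sig = f.read()
finally:
    os.remove(path)

# 'utf-8' keeps the BOM as U+FEFF; 'utf-8-sig' strips it.
print(repr(plain[:2]))  # '\ufeff1'
print(repr(sig[:2]))    # '1\n'
```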

@@ -11,44 +11,44 @@ def add_text_impl(

     text: str,
     start: float,
     end: float,
-    draft_id: str = None,
+    draft_id: str | None = None,  # Python 3.10+ new syntax
     transform_y: float = -0.8,
     transform_x: float = 0,
-    font: str = "文轩体",  # Wenxuan Font
+    font: Optional[str] = None,
     font_color: str = "#ffffff",
     font_size: float = 8.0,
     track_name: str = "text_main",
-    vertical: bool = False,  # Whether to display vertically
-    font_alpha: float = 1.0,  # Transparency, range 0.0-1.0
+    vertical: bool = False,
+    font_alpha: float = 1.0,
     # Border parameters
     border_alpha: float = 1.0,
     border_color: str = "#000000",
-    border_width: float = 0.0,  # Default no border display
+    border_width: float = 0.0,
     # Background parameters
     background_color: str = "#000000",
     background_style: int = 1,
-    background_alpha: float = 0.0,  # Default no background display
-    background_round_radius: float = 0.0,  # Background corner radius, range 0.0-1.0
-    background_height: float = 0.14,  # Background height, range 0.0-1.0
-    background_width: float = 0.14,  # Background width, range 0.0-1.0
-    background_horizontal_offset: float = 0.5,  # Background horizontal offset, range 0.0-1.0
-    background_vertical_offset: float = 0.5,  # Background vertical offset, range 0.0-1.0
-    # Shadow parameters
-    shadow_enabled: bool = False,  # Whether to enable shadow
-    shadow_alpha: float = 0.9,  # Shadow transparency, range 0.0-1.0
-    shadow_angle: float = -45.0,  # Shadow angle, range -180.0 to 180.0
-    shadow_color: str = "#000000",  # Shadow color
-    shadow_distance: float = 5.0,  # Shadow distance
-    shadow_smoothing: float = 0.15,  # Shadow smoothness, range 0.0-1.0
+    background_alpha: float = 0.0,
+    background_round_radius: float = 0.0,
+    background_height: float = 0.14,
+    background_width: float = 0.14,
+    background_horizontal_offset: float = 0.5,
+    background_vertical_offset: float = 0.5,
+    # Shadow parameters
+    shadow_enabled: bool = False,
+    shadow_alpha: float = 0.9,
+    shadow_angle: float = -45.0,
+    shadow_color: str = "#000000",
+    shadow_distance: float = 5.0,
+    shadow_smoothing: float = 0.15,
     # Bubble effect
-    bubble_effect_id: Optional[str] = None,
-    bubble_resource_id: Optional[str] = None,
+    bubble_effect_id: str | None = None,
+    bubble_resource_id: str | None = None,
     # Text effect
-    effect_effect_id: Optional[str] = None,
-    intro_animation: Optional[str] = None,  # Intro animation type
-    intro_duration: float = 0.5,  # Intro animation duration (seconds), default 0.5 seconds
-    outro_animation: Optional[str] = None,  # Outro animation type
-    outro_duration: float = 0.5,  # Outro animation duration (seconds), default 0.5 seconds
+    effect_effect_id: str | None = None,
+    intro_animation: str | None = None,
+    intro_duration: float = 0.5,
+    outro_animation: str | None = None,
+    outro_duration: float = 0.5,
     width: int = 1080,
     height: int = 1920,
     fixed_width: float = -1,  # Text fixed width ratio, default -1 means not fixed
@@ -102,11 +102,14 @@ def add_text_impl(
     :return: Updated draft information
     """
     # Validate if font is in Font_type
-    try:
-        font_type = getattr(Font_type, font)
-    except:
-        available_fonts = [attr for attr in dir(Font_type) if not attr.startswith('_')]
-        raise ValueError(f"Unsupported font: {font}, please use one of the fonts in Font_type: {available_fonts}")
+    if font is None:
+        font_type = None
+    else:
+        try:
+            font_type = getattr(Font_type, font)
+        except:
+            available_fonts = [attr for attr in dir(Font_type) if not attr.startswith('_')]
+            raise ValueError(f"Unsupported font: {font}, please use one of the fonts in Font_type: {available_fonts}")

     # Validate alpha value range
     if not 0.0 <= font_alpha <= 1.0:

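The new `font is None` branch is needed because the old code always called `getattr(Font_type, font)`. With `font=None`, that call raises `TypeError` (attribute names must be strings), and the bare `except` then reported it as an unsupported font.

Below is a minimal sketch of the new branch. It uses a hypothetical `FontType` class in place of pyJianYingDraft's `Font_type`, and it catches `AttributeError` rather than every exception.

```python
from typing import Optional


class FontType:
    """Hypothetical stand-in for pyJianYingDraft's Font_type (names invented)."""
    SourceHanSans = "font-id-1"
    Wenxuan = "font-id-2"


def resolve_font(font: Optional[str]):
    """Return the matching font attribute, or None to keep the default font."""
    if font is None:
        # Without this check, getattr(FontType, None) would raise TypeError.
        return None
    try:
        return getattr(FontType, font)
    except AttributeError:
        available = [name for name in dir(FontType) if not name.startswith("_")]
        raise ValueError(f"Unsupported font: {font}, available: {available}") from None
```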

@@ -233,7 +233,7 @@ def add_subtitle():

     time_offset = data.get('time_offset', 0.0)  # Default 0 seconds

     # Font style parameters
-    font = data.get('font', None)
+    font = data.get('font', "思源粗宋")
     font_size = data.get('font_size', 5.0)  # Default size 5.0
     bold = data.get('bold', False)  # Default not bold
     italic = data.get('italic', False)  # Default not italic
@@ -642,6 +642,7 @@ def add_effect():
     # Get required parameters
     effect_type = data.get('effect_type')  # Effect type name, will match from Video_scene_effect_type or Video_character_effect_type
     start = data.get('start', 0)  # Start time (seconds), default 0
+    effect_category = data.get('effect_category', "scene")  # Effect category, "scene" or "character", default "scene"
     end = data.get('end', 3.0)  # End time (seconds), default 3 seconds
     draft_id = data.get('draft_id')  # Draft ID, if None or corresponding zip file not found, create new draft
     track_name = data.get('track_name', "effect_01")  # Track name, can be omitted when there is only one effect track
@@ -665,6 +666,7 @@ def add_effect():
     # Call add_effect_impl method
     draft_result = add_effect_impl(
         effect_type=effect_type,
+        effect_category=effect_category,
         start=start,
         end=end,
         draft_id=draft_id,

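As far as this hunk shows, `add_effect()` forwards `effect_category` unchecked. Any value other than `"scene"` or `"character"` leaves `effect_enum` as `None` in `add_effect_impl` and surfaces as `Unknown <category> effect type`.

The request parser below applies the same defaults as the diff but rejects bad input up front. `parse_effect_request` is hypothetical and not part of the project, and the `end > start` check is an extra assumption.

```python
from typing import Any, Dict

VALID_CATEGORIES = ("scene", "character")


def parse_effect_request(data: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical helper: read /add_effect parameters with the diff's defaults."""
    effect_type = data.get("effect_type")
    if not effect_type:
        raise ValueError("effect_type is required")
    effect_category = data.get("effect_category", "scene")
    if effect_category not in VALID_CATEGORIES:
        raise ValueError(f"effect_category must be one of {VALID_CATEGORIES}")
    start = float(data.get("start", 0))
    end = float(data.get("end", 3.0))
    if end <= start:
        # Extra assumption: an effect needs a positive duration.
        raise ValueError("end must be greater than start")
    return {
        "effect_type": effect_type,
        "effect_category": effect_category,
        "start": start,
        "end": end,
        "draft_id": data.get("draft_id"),
        "track_name": data.get("track_name", "effect_01"),
    }
```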

@@ -1,6 +1,6 @@

 {
   "is_capcut_env": true, // Whether to use CapCut environment (true) or JianYing environment (false)
-  "draft_domain": "https://www.install-ai-guider.top", // Base domain for draft operations
+  "draft_domain": "https://www.capcutapi.top", // Base domain for draft operations
   "port": 9001, // Port number for the local server
   "preview_router": "/draft/downloader", // Router path for preview functionality
   "is_upload_draft": false, // Whether to upload drafts to remote storage

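`config.json.example` uses `//` comments, which Python's standard `json` module rejects. This diff does not show how `settings.py` reads the file, so the following is only one possible approach: strip comments outside string literals before calling `json.loads`.

The scanner works character by character rather than applying a simple regex on `//`. That keeps URLs such as `https://www.capcutapi.top` intact. `strip_line_comments` and `load_jsonc` are hypothetical helper names.

```python
import json
from typing import Any


def strip_line_comments(text: str) -> str:
    """Remove // comments that appear outside JSON string literals."""
    out = []
    in_string = False
    escaped = False
    i = 0
    while i < len(text):
        ch = text[i]
        if in_string:
            out.append(ch)
            if escaped:
                escaped = False      # this character was escaped; keep going
            elif ch == "\\":
                escaped = True       # next character is escaped
            elif ch == '"':
                in_string = False    # closing quote
            i += 1
        elif ch == '"':
            in_string = True
            out.append(ch)
            i += 1
        elif text.startswith("//", i):
            # Skip to the end of the line but keep the newline itself.
            newline = text.find("\n", i)
            i = len(text) if newline == -1 else newline
        else:
            out.append(ch)
            i += 1
    return "".join(out)


def load_jsonc(text: str) -> Any:
    """Parse JSON that may contain // line comments."""
    return json.loads(strip_line_comments(text))


sample = """{
  "is_capcut_env": true, // CapCut (true) or JianYing (false)
  "draft_domain": "https://www.capcutapi.top", // Base domain
  "port": 9001
}"""
cfg = load_jsonc(sample)
```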

131

example.py

@@ -9,6 +9,8 @@ import threading

 from pyJianYingDraft.text_segment import TextStyleRange, Text_style, Text_border
 from util import hex_to_rgb

+import shutil
+import os

 # Base URL of the service; modify it to match your deployment
 BASE_URL = f"http://localhost:{PORT}"
@@ -160,7 +162,7 @@ def add_text_impl(text, start, end, font, font_color, font_size, track_name, dra

     return make_request("add_text", data)

-def add_image_impl(image_url, width, height, start, end, track_name, draft_id=None,
+def add_image_impl(image_url, start, end, width=None, height=None, track_name="image_main", draft_id=None,
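The new `add_image_impl` signature moves `start` and `end` ahead of `width` and `height`. A caller that passed these positionally in the old order will now bind values to the wrong parameters, and nothing raises an error.

The stub below shows the effect and why keyword arguments are the safer calling style. `add_image_payload` is hypothetical and covers only the parameters visible in this hunk.

```python
from typing import Any, Dict, Optional


def add_image_payload(image_url: str, start: float, end: float,
                      width: Optional[int] = None, height: Optional[int] = None,
                      track_name: str = "image_main",
                      draft_id: Optional[str] = None) -> Dict[str, Any]:
    """Hypothetical stub mirroring the leading parameters of the new add_image_impl.

    It only builds a request body; fields left as None are dropped.
    """
    data: Dict[str, Any] = {
        "image_url": image_url,
        "start": start,
        "end": end,
        "width": width,
        "height": height,
        "track_name": track_name,
        "draft_id": draft_id,
    }
    return {key: value for key, value in data.items() if value is not None}


# An old-style positional call now binds the numbers to the wrong parameters:
legacy = add_image_payload("https://example.com/a.png", 1080, 1920, 0, 5)
# Keyword arguments are robust to the reordering:
fixed = add_image_payload("https://example.com/a.png", start=0, end=5,
                          width=1080, height=1920)
```

The stub drops `None` fields from its payload. Whether the real `example.py` does the same is not visible in this diff.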